Category: Deep Learning

-

Make-A-Video

Make-A-Video builds on recent progress in text-to-image generation to enable text-to-video generation. The system uses images with descriptions to learn what the world looks like and how it is often described. It also uses unlabeled videos to learn how the world moves. With this data, Make-A-Video lets you bring your…

-

1907.10903 DropEdge: Towards Deep Graph Convolutional Networks on Node Classification

https://arxiv.org/abs/1907.10903

-

Using Deepchecks to Instantly Evaluate ML Models | Towards Data Science

https://towardsdatascience.com/the-newest-package-for-instantly-evaluating-ml-models-deepchecks-d478e1c20d04

-

GeLU activation function – On the Impact of the Activation Function on Deep Neural Networks Training

https://arxiv.org/abs/1902.06853 The weight initialization and the activation function of deep neural networks have a crucial impact on the performance of the training procedure. An inappropriate selection can lead to the loss of information of the input during forward propagation and the exponential…

-

The birth of an important discovery in deep clustering | by Giansalvo Cirrincione | Dec, 2021 | Towards Data Science

https://towardsdatascience.com/the-birth-of-an-important-discovery-in-deep-clustering-c2791f2f2d82

-

AlphaStar: Grandmaster level in StarCraft II using multi-agent reinforcement learning | DeepMind

https://deepmind.com/blog/article/AlphaStar-Grandmaster-level-in-StarCraft-II-using-multi-agent-reinforcement-learning

-

Deep Neural Networks and Tabular Data: A Survey

Heterogeneous tabular data are the most commonly used form of data and are essential for numerous critical and computationally demanding applications. On homogeneous data sets, deep neural networks have repeatedly shown excellent performance and have therefore been widely adopted. However, their application to modeling tabular data (inference or generation) remains highly challenging. This work provides…

-

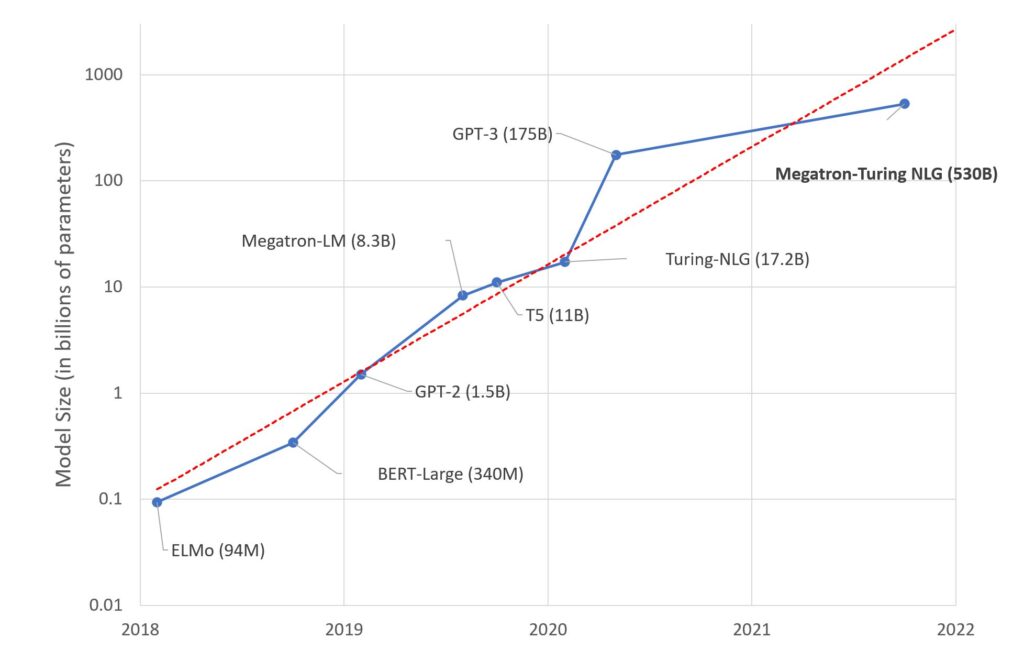

Using DeepSpeed and Megatron to Train Megatron-Turing NLG 530B, the World’s Largest and Most Powerful Generative Language Model – Microsoft Research

Published October 11, 2021, by Ali Alvi, Group Program Manager (Microsoft Turing), and Paresh Kharya, Senior Director of Product Management, Accelerated Computing, NVIDIA. We are excited to introduce the DeepSpeed- and Megatron-powered Megatron-Turing Natural Language Generation model (MT-NLG), the largest and the most powerful monolithic transformer language model trained to date, with…

-

Unsupervised Deep Video Denoising

https://sreyas-mohan.github.io/udvd/ Real microscopy videos denoised using UDVD, trained on real microscopy datasets. Deep convolutional neural networks (CNNs) currently achieve state-of-the-art performance in denoising videos.

-

WarpDrive: Extremely Fast End-to-End Deep Multi-Agent Reinforcement Learning on a GPU

https://github.com/salesforce/warp-drive WarpDrive is a flexible, lightweight, and easy-to-use open-source reinforcement learning (RL) framework that implements end-to-end multi-agent RL on a single GPU (Graphics Processing Unit). Using the extreme parallelization capability of GPUs, WarpDrive enables orders-of-magnitude faster RL compared …

-

The Machine & Deep Learning Compendium

https://github.com/orico/www.mlcompendium.com/

-

GitHub – labmlai/annotated_deep_learning_paper_implementations: 🧑‍🏫 Implementations/tutorials of deep learning papers with side-by-side notes 📝; including transformers (original, xl, switch, feedback, vit), optimizers (adam, radam, adabelief), gans(…

https://github.com/labmlai/annotated_deep_learning_paper_implementations

-

Open-Ended Learning Leads to Generally Capable Agents | DeepMind

https://deepmind.com/research/publications/open-ended-learning-leads-to-generally-capable-agents

-

Deep learning on graph for nlp

https://drive.google.com/file/d/1A9Gtzyan4tqFTgmNsNfwOkO4ELR77iNh/view

-

An introduction to Recommendation Systems: an overview of machine and deep learning architectures

https://theaisummer.com/recommendation-systems Learn about the SOTA recommender system models, from collaborative filtering and factorization machines to DCN and DLRM.

-

DeepSpeed: Accelerating large-scale model inference and training via system optimizations and compression – Microsoft Research

https://www.microsoft.com/en-us/research/blog/deepspeed-accelerating-large-scale-model-inference-and-training-via-system-optimizations-and-compression/

-

Do Wide and Deep Networks Learn the Same Things?

https://ai.googleblog.com/2021/05/do-wide-and-deep-networks-learn-same.html Posted by Thao Nguyen, AI Resident, Google Research. A common practice to improve a neural network’s performance and tailor it to available computational resources is to adjust the architecture depth and width. Indeed, popular families of neural networks, including EfficientNet, ResNet and Transformers,…

-

DeepMind, Microsoft, Allen AI & UW Researchers Convert Pretrained Transformers into RNNs, Lowering Memory Cost While Retaining High Accuracy | by Synced | SyncedReview | Apr, 2021 | Medium

https://medium.com/syncedreview/deepmind-microsoft-allen-ai-uw-researchers-convert-pretrained-transformers-into-rnns-lowering-806b94bf0521

-

DeepMoji

https://medium.com/@bjarkefelbo/what-can-we-learn-from-emojis-6beb165a5ea0 https://github.com/bfelbo/DeepMoji State-of-the-art deep learning model for analyzing sentiment, emotion, sarcasm, etc.

-

Way beyond AlphaZero: Berkeley and Google work shows robotics may be the deepest machine learning of all | ZDNet

https://www.zdnet.com/article/way-beyond-alphazero-berkeley-and-google-work-shows-robotics-may-be-the-deepest-machine-learning-of-all/?utm_content=buffere4883&utm_medium=social&utm_source=twitter.com&utm_campaign=buffer

-

2001.09977 Towards a Human-like Open-Domain Chatbot

https://arxiv.org/abs/2001.09977

-

Satoshi Iizuka — DeepRemaster

http://iizuka.cs.tsukuba.ac.jp/projects/remastering/en/index.html

-

Top 4 libraries you must know before diving into any deep learning projects

https://medium.com/@abhisheksingh007226/top-4-libraries-you-must-know-before-diving-into-any-deep-learning-projects-61904286478d Libraries play a very important role in solving any problem. It makes our task easier. For example we can wrap image classification task…

-

Turing-NLG: A 17-billion-parameter language model by Microsoft

https://www.microsoft.com/en-us/research/blog/turing-nlg-a-17-billion-parameter-language-model-by-microsoft/

-

Deep Forest: Towards An Alternative to Deep Neural Networks | IJCAI

https://www.ijcai.org/proceedings/2017/497

-

DeepGraphLearning/LiteratureDL4Graph

https://github.com/DeepGraphLearning/LiteratureDL4Graph

-

How a simple mix of object-oriented programming can sharpen your deep learning prototype

https://towardsdatascience.com/how-a-simple-mix-of-object-oriented-programming-can-sharpen-your-deep-learning-prototype-19893bd969bd

-

Which GPU(s) to Get for Deep Learning

https://timdettmers.com/2019/04/03/which-gpu-for-deep-learning/

-

GitHub – benedekrozemberczki/GEMSEC: The TensorFlow reference implementation of ‘GEMSEC: Graph Embedding with Self Clustering’ (ASONAM 2019).

https://github.com/benedekrozemberczki/GEMSEC

-

GitHub – ahmedbesbes/mrnet: Implementation of the paper: Deep-learning-assisted diagnosis for knee magnetic resonance imaging: Development and retrospective validation of MRNet

https://github.com/ahmedbesbes/mrnet?fbclid=IwAR3jrvdG4enmqm9SwkrPtNCXhsGAa_F7Ey6Fdn69qy1F2-CeD-wFBOg22Qk

-

Autocompletion with deep learning

https://tabnine.com/blog/deep

-

GitHub – eng-amrahmed/vanilla-gan-tf2: The Simplest and straightforward Tensorflow 2.0 implementation for vanilla GAN

https://github.com/eng-amrahmed/vanilla-gan-tf2

-

New AI programming language goes beyond deep learning | MIT News

http://news.mit.edu/2019/ai-programming-gen-0626