Published October 11, 2021

By Ali Alvi, Group Program Manager (Microsoft Turing); Paresh Kharya, Senior Director of Product Management, Accelerated Computing, NVIDIA

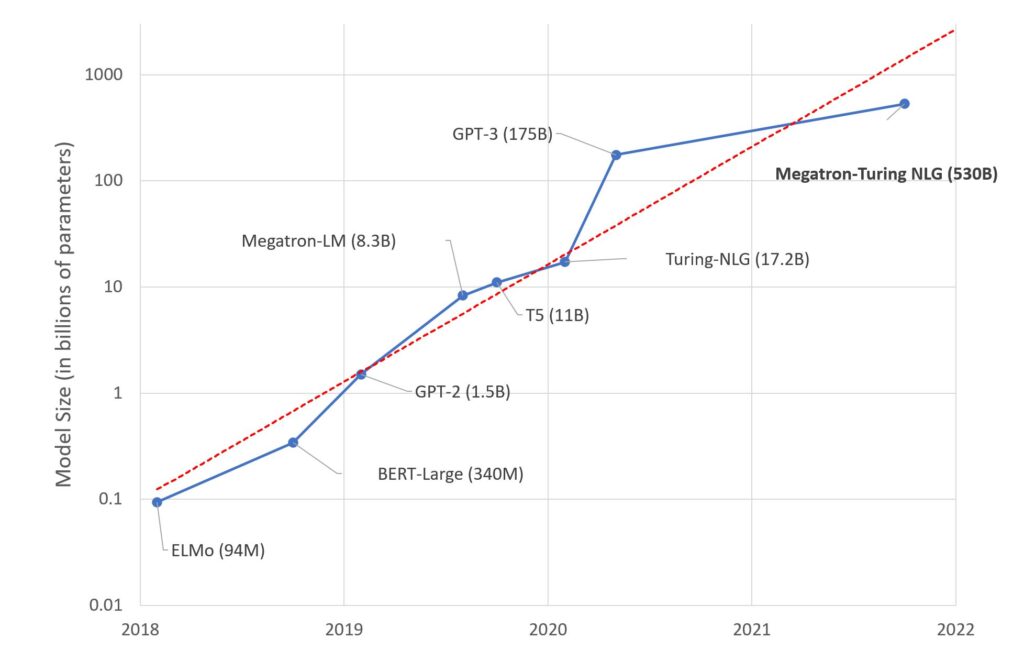

We are excited to introduce the DeepSpeed- and Megatron-powered Megatron-Turing Natural Language Generation model (MT-NLG), the largest and the most powerful monolithic transformer language model trained to date, with 530 billion parameters. It is the result of a research collaboration between Microsoft and NVIDIA to further parallelize and optimize the training of very large AI models.

As the successor to Turing NLG 17B and Megatron-LM, MT-NLG has three times as many parameters as the largest existing model of this type and demonstrates unmatched accuracy across a broad set of natural language tasks.

The 105-layer, transformer-based MT-NLG improved upon the prior state-of-the-art models in zero-, one-, and few-shot settings and set the new standard for large-scale language models in both model scale and quality.

Transformer-based language models in natural language processing (NLP) have driven rapid progress in recent years, fueled by computation at scale, large datasets, and advanced algorithms and software for training these models.