Tag: Machine Learning

-

Machine Learning for Everyone

“Machine Learning is like sex in high school. Everyone is talking about it, a few know what to do, and only your teacher is doing it. If you ever tried to read articles about machine learning on the Internet, most likely you stumbled upon two types of them: thick academic trilogies filled with theorems (I…

-

Make-A-Video

Make-A-Video research builds on recent progress in text-to-image generation technology to enable text-to-video generation. The system uses images with descriptions to learn what the world looks like and how it is often described. It also uses unlabeled videos to learn how the world moves. With this data, Make-A-Video lets you bring your…

-

GitHub – alexlenail/NN-SVG: Publication-ready NN-architecture schematics.

Illustrations of Neural Network architectures are often time-consuming to produce, and machine learning researchers all too often find themselves constructing these diagrams from scratch by hand. https://github.com/alexlenail/NN-SVG

-

The history of Amazon’s forecasting algorithm

Amazon’s forecasting team has drawn on advances in deep learning, image recognition, and natural language processing to develop a forecasting model that makes accurate decisions across diverse product categories. That journey took more than a decade. https://www.amazon.science/latest-news/the-history-of-amazons-forecasting-algorithm

-

7 Considerations Before Pushing Machine Learning Models to Production

Being part of a company that values scalability, I see daily, as a data scientist, the challenges that come with putting AI-based solutions into production. These challenges are numerous and cover a variety of aspects: modeling and system design, data engineering, resource management, SLAs, etc. https://towardsdatascience.com/7-considerations-before-pushing-machine-learning-models-to-production-efab64c4d433

-

A brief timeline of NLP from Bag of Words to the Transformer family | by Fabio Chiusano | NLPlanet | Feb, 2022 | Medium

https://medium.com/nlplanet/a-brief-timeline-of-nlp-from-bag-of-words-to-the-transformer-family-7caad8bbba56

-

[1907.10903] DropEdge: Towards Deep Graph Convolutional Networks on Node Classification

https://arxiv.org/abs/1907.10903
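DropEdge's core idea is to randomly remove a fraction of the input graph's edges at every training epoch, which acts as data augmentation and helps counter over-smoothing in deep GCNs. The minimal sketch below illustrates only that resampling step in plain Python. It assumes an undirected edge list in which each edge appears once; the function name `drop_edge` and the toy graph are illustrative, not the authors' code, and the GCN itself is omitted.

```python
import random
from typing import List, Tuple

Edge = Tuple[int, int]


def drop_edge(edges: List[Edge], p: float, rng: random.Random) -> List[Edge]:
    """Return a random subset of `edges` with round(p * len(edges)) edges removed.

    Each undirected edge is assumed to appear once in `edges`; the kept edges
    are returned in their original order.
    """
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    n_keep = len(edges) - round(p * len(edges))
    kept = sorted(rng.sample(range(len(edges)), n_keep))
    return [edges[i] for i in kept]


# Usage: resample the graph at the start of every training epoch.
edges = [(0, 1), (1, 2), (2, 3), (3, 0), (0, 2)]
rng = random.Random(0)
for epoch in range(3):
    sampled = drop_edge(edges, p=0.4, rng=rng)
    # ... build the (normalized) adjacency from `sampled` and run one GCN epoch ...
    print(epoch, sampled)
```

Because a fresh subset is drawn each epoch, the model sees a slightly different graph every time, while evaluation would normally use the full edge list.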

-

Feature Propagation is a simple and surprisingly efficient solution for learning on graphs with missing node features | by Michael Bronstein | Feb, 2022 | Towards Data Science

https://towardsdatascience.com/learning-on-graphs-with-missing-features-dd34be61b06
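Feature Propagation's core loop can be summarized as: start with missing features at zero, repeatedly diffuse features over the graph, and after every step reset the observed features to their known values; the completed features then feed a downstream GNN. This sketch assumes a single scalar feature per node and a symmetrically normalized adjacency D^{-1/2} A D^{-1/2}; `feature_propagation`, `num_iters=40`, and the toy path graph are illustrative choices, not the authors' implementation.

```python
import math
from typing import Dict, List, Tuple


def feature_propagation(
    num_nodes: int,
    edges: List[Tuple[int, int]],
    known: Dict[int, float],
    num_iters: int = 40,
) -> List[float]:
    """Fill in missing scalar node features by diffusion with known values clamped."""
    neighbors: List[List[int]] = [[] for _ in range(num_nodes)]
    for u, v in edges:  # undirected graph, each edge listed once
        neighbors[u].append(v)
        neighbors[v].append(u)
    deg = [len(nbrs) for nbrs in neighbors]

    # Missing features start at zero; known features act as fixed boundary values.
    x = [known.get(i, 0.0) for i in range(num_nodes)]
    for _ in range(num_iters):
        # One diffusion step: x <- D^{-1/2} A D^{-1/2} x (isolated nodes get 0.0).
        new_x = [
            sum((x[j] / math.sqrt(deg[i] * deg[j]) for j in neighbors[i]), 0.0)
            for i in range(num_nodes)
        ]
        # Reset the observed features to their known values.
        for i, value in known.items():
            new_x[i] = value
        x = new_x
    return x


# Usage: a 4-node path 0-1-2-3 where only the end points are observed.
filled = feature_propagation(4, [(0, 1), (1, 2), (2, 3)], {0: 0.0, 3: 1.0})
print([round(v, 3) for v in filled])
```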

-

Bayesian Hyperparameter Optimization with tune-sklearn in PyCaret

By Antoni Baum, Distributed Computing with Ray (Medium). Here’s a situation every PyCaret user is familiar with: after selecting a promising model or two from compare_models(), it’s time to tune its hyperparameters to squeeze out all of the model’s… https://medium.com/distributed-computing-with-ray/bayesian-hyperparameter-optimization-with-tune-sklearn-in-pycaret-a33b1592662f

-

Google & J.P. Morgan Propose Advanced Bandit Sampling for Multiplex Networks

Graph neural networks (GNNs) have gained popularity in the AI research community due to their impressive performance in high-impact applications such as drug discovery and social network analyses. Most existing studies on GNNs, however, have focused on "monoplex" settings (networks with… https://syncedreview.com/2022/02/10/deepmind-podracer-tpu-based-rl-frameworks-deliver-exceptional-performance-at-low-cost-203/

-

Improve high-value research with Hugging Face and Amazon SageMaker asynchronous inference endpoints | AWS Machine Learning Blog

https://aws.amazon.com/fr/blogs/machine-learning/improve-high-value-research-with-hugging-face-and-amazon-sagemaker-asynchronous-inference-endpoints/

-

yzpang/gold-off-policy-text-gen-iclr21

https://github.com/yzpang/gold-off-policy-text-gen-iclr21

-

28 January, 2022 07:19

https://medium.com/@anushkhabajpai/mlops-best-resources-340b69615df2

-

Using Deepchecks to Instantly Evaluate ML Models | Towards Data Science

https://towardsdatascience.com/the-newest-package-for-instantly-evaluating-ml-models-deepchecks-d478e1c20d04

-

Hugging Face Tasks

Hugging Face is the home for all Machine Learning tasks. Here you can find what you need to get started with a task: demos, use cases, models, datasets, and more! https://huggingface.co/tasks

-

GELU activation function – On the Impact of the Activation Function on Deep Neural Networks Training

The weight initialization and the activation function of deep neural networks have a crucial impact on the performance of the training procedure. An inappropriate selection can lead to the loss of information of the input during forward propagation and the exponential… https://arxiv.org/abs/1902.06853
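For reference, GELU is defined as x·Φ(x), where Φ is the standard normal CDF, and a tanh-based approximation is also widely used. The sketch below writes both with Python's `math` module; it follows the standard GELU definition and is not necessarily the formulation used in the linked paper.

```python
import math


def gelu(x: float) -> float:
    """Exact GELU: x * Phi(x), with Phi the standard normal CDF."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def gelu_tanh(x: float) -> float:
    """Widely used tanh approximation of GELU."""
    return 0.5 * x * (1.0 + math.tanh(math.sqrt(2.0 / math.pi) * (x + 0.044715 * x ** 3)))


for x in (-2.0, -1.0, 0.0, 1.0, 2.0):
    print(f"{x:+.1f}  exact={gelu(x):+.5f}  tanh approx={gelu_tanh(x):+.5f}")
```

Unlike ReLU, GELU is smooth everywhere and slightly negative for negative inputs, which is one reason its behavior during signal propagation differs from ReLU's.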

-

graviraja/MLOps-Basics

https://github.com/graviraja/MLOps-Basics

-

Implement Your Own Music Recommender with Graph Neural Networks (LightGCN)

By Ben Alexander, Jean-Peic Chou, and Aman Bansal for Stanford CS224W. https://medium.com/@benalex/implement-your-own-music-recommender-with-graph-neural-networks-lightgcn-f59e3bf5f8f5

-

NVIDIA is offering a four-hour, self-paced course on MLOps

https://analyticsindiamag.com/nvidia-is-offering-a-four-hour-self-paced-course-on-mlops/

-

Yale University and IBM Researchers Introduce Kernel Graph Neural Networks (KerGNNs) – MarkTechPost

https://www.marktechpost.com/2022/01/07/yale-university-and-ibm-researchers-introduce-kernel-graph-neural-networks-kergnns/

-

3 January, 2022 23:05

https://towardsdatascience.com/machine-learning-in-sql-using-pycaret-87aff377d90c

-

The birth of an important discovery in deep clustering | by Giansalvo Cirrincione | Dec, 2021 | Towards Data Science

https://towardsdatascience.com/the-birth-of-an-important-discovery-in-deep-clustering-c2791f2f2d82

-

End-to-End AutoML Pipeline with H2O AutoML, MLflow, FastAPI, and Streamlit | by Kenneth Leung | Dec, 2021 | Towards Data Science

https://towardsdatascience.com/end-to-end-automl-train-and-serve-with-h2o-mlflow-fastapi-and-streamlit-5d36eedfe606

-

Hugging Face Transformers with Keras: Fine-tune a non-English BERT for Named Entity Recognition

https://www.philschmid.de/huggingface-transformers-keras-tf

-

Microsoft & GitHub on Git-Based CI / CD for Machine Learning & MLOps | Iguazio

https://www.iguazio.com/sessions/git-based-ci-cd-for-machine-learning-mlops/?utm_campaign=LI_LeadAds_CI_CD_OnDemandWebinar4&utm_source=linkedin&utm_medium=paidsocial&li_fat_id=f15c53aa-870b-4744-b589-8a9a2dd03022

-

Introduction to Clustering in Python with PyCaret | by Moez Ali | Nov, 2021 | Towards Data Science

https://towardsdatascience.com/introduction-to-clustering-in-python-with-pycaret-5d869b9714a3

-

NorskRegnesentral/skweak: skweak: A software toolkit for weak supervision applied to NLP tasks

https://github.com/NorskRegnesentral/skweak

-

Open source NLP is fueling a new wave of startups

A growing number of startups are offering open source language models as a service, competing with heavyweights like OpenAI. https://venturebeat-com.cdn.ampproject.org/c/s/venturebeat.com/2021/12/23/open-source-nlp-is-fueling-a-new-wave-of-startups/amp/

-

Fine-Tuning Bert for Tweets Classification ft. Hugging Face | by Rajan Choudhary | Dec, 2021 | Medium

https://codistro.medium.com/fine-tuning-bert-for-tweets-classification-ft-hugging-face-8afebadd5dbf

-

New Serverless Transformers using Amazon SageMaker Serverless Inference and Hugging Face

Amazon SageMaker Serverless Inference is a fully managed serverless inference option that makes it easy to deploy and scale ML models. It is built on top of AWS Lambda and fully integrated into the Amazon SageMaker service. Serverless Inference… https://www.philschmid.de/serverless-transformers-sagemaker-huggingface

-

Advanced NLP with spaCy

https://spacy.io/universe/project/spacy-course

-

ZenML

ZenML is the open-source MLOps framework for reproducible ML pipelines and production-ready Machine Learning. https://zenml.io/

-

Interpretable_Text_Classification_And_Clustering – a Hugging Face Space by Hellisotherpeople

https://huggingface.co/spaces/Hellisotherpeople/Interpretable_Text_Classification_And_Clustering

-

Google AI Blog: Training Machine Learning Models More Efficiently with Dataset Distillation

https://ai.googleblog.com/2021/12/training-machine-learning-models-more.html?m=1

-

d4data/bias-detection-model · Hugging Face

https://huggingface.co/d4data/bias-detection-model

-

Clustering sentence embeddings to identify intents in short text

The unsupervised learning problem of clustering short-text messages can be turned into a constrained optimization problem to automatically tune UMAP + HDBSCAN hyperparameters. The chatintents package makes it easy to implement this tuning process. User dialogue interactions can be a tremendous source of information on how to improve products or services. Understanding why people…
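The constrained tuning idea above can be sketched as a search that scores each hyperparameter setting by a cost and penalizes settings whose number of clusters falls outside a target range. This sketch rests on several assumptions: the cost is the fraction of points labeled as noise (-1, as HDBSCAN does), a plain grid search stands in for a Bayesian search, and `toy_cluster` with its fixed `TOY_RESULTS` is a hypothetical stand-in for running UMAP followed by HDBSCAN. None of these names are the chatintents API.

```python
import itertools
from typing import Callable, Dict, List, Optional, Sequence, Tuple

Params = Dict[str, int]


def cost(labels: Sequence[int], min_clusters: int, max_clusters: int, penalty: float = 1.0) -> float:
    """Noise fraction, plus a penalty if the cluster count violates the constraint."""
    n_clusters = len({label for label in labels if label != -1})
    noise_fraction = sum(1 for label in labels if label == -1) / len(labels)
    violates = not (min_clusters <= n_clusters <= max_clusters)
    return noise_fraction + (penalty if violates else 0.0)


def tune(
    cluster_fn: Callable[[Params], List[int]],
    grid: Dict[str, Sequence[int]],
    min_clusters: int,
    max_clusters: int,
) -> Tuple[Optional[Params], float]:
    """Try every grid combination and keep the lowest-cost one."""
    names = list(grid)
    best_params: Optional[Params] = None
    best_cost = float("inf")
    for values in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        c = cost(cluster_fn(params), min_clusters, max_clusters)
        if c < best_cost:
            best_params, best_cost = params, c
    return best_params, best_cost


# Hypothetical stand-in for UMAP + HDBSCAN on 12 short texts: a larger
# min_cluster_size yields fewer clusters but more points labeled as noise (-1).
TOY_RESULTS = {
    2: [0, 0, 1, 1, 2, 2, 3, 3, 4, 4, 5, -1],
    3: [0, 0, 0, 1, 1, 1, 2, 2, 2, -1, -1, -1],
    4: [0, 0, 0, 0, 1, 1, 1, 1, -1, -1, -1, -1],
}


def toy_cluster(params: Params) -> List[int]:
    return TOY_RESULTS[params["min_cluster_size"]]


best, best_cost = tune(toy_cluster, {"min_cluster_size": [2, 3, 4]}, min_clusters=2, max_clusters=4)
print(best, best_cost)
```

In this toy run, setting 2 has the least noise but produces six clusters, so the constraint rules it out and setting 3 wins with a cost of 0.25.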

-

AlphaStar: Grandmaster level in StarCraft II using multi-agent reinforcement learning | DeepMind

https://deepmind.com/blog/article/AlphaStar-Grandmaster-level-in-StarCraft-II-using-multi-agent-reinforcement-learning

-

Deep Neural Networks and Tabular Data: A Survey

Heterogeneous tabular data are the most commonly used form of data and are essential for numerous critical and computationally demanding applications. On homogeneous data sets, deep neural networks have repeatedly shown excellent performance and have therefore been widely adopted. However, their application to modeling tabular data (inference or generation) remains highly challenging. This work provides…

-

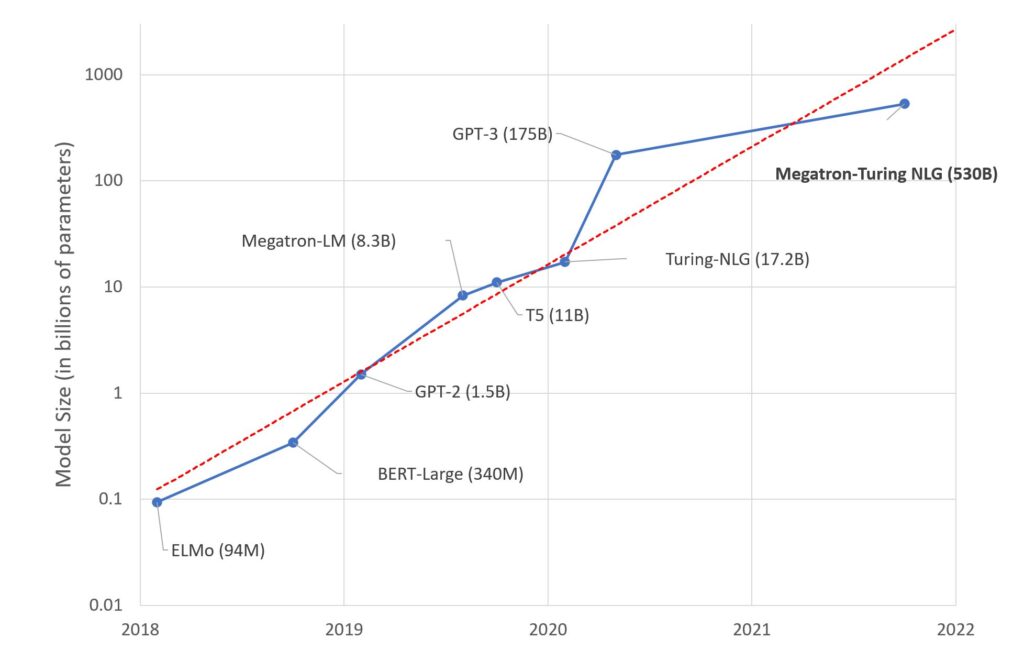

Using DeepSpeed and Megatron to Train Megatron-Turing NLG 530B, the World’s Largest and Most Powerful Generative Language Model – Microsoft Research

Published October 11, 2021, by Ali Alvi, Group Program Manager (Microsoft Turing), and Paresh Kharya, Senior Director of Product Management, Accelerated Computing, NVIDIA. We are excited to introduce the DeepSpeed- and Megatron-powered Megatron-Turing Natural Language Generation model (MT-NLG), the largest and the most powerful monolithic transformer language model trained to date, with…

-

Google’s Zero-Label Language Learning Achieves Results Competitive With Supervised Learning

While contemporary deep learning models continue to achieve outstanding results across a wide range of tasks, these models are known to have huge data appetites. The emergence of large-scale pretrained language models such as OpenAI’s GPT-3 has helped reduce the need for task-specific labelled data in natural language processing… https://medium.com/syncedreview/googles-zero-label-language-learning-achieves-results-competitive-with-supervised-learning-e6dbd984d0e1

-

WIT (Wikipedia-based Image Text) Dataset

WIT (Wikipedia-based Image Text) Dataset is a large multimodal multilingual dataset comprising 37M+ image-text sets with 11M+ unique images across 100+ languages. WIT is composed of a curated set of 37.6 million entity-rich image-text examples with 11.5 million unique images across 108 Wikipedia…

-

Introduction to MLOps for Data Science

https://pub.towardsai.net/introduction-to-mlops-for-data-science-e2ca5a759f68

-

Unsupervised Deep Video Denoising

Deep convolutional neural networks (CNNs) currently achieve state-of-the-art performance in denoising videos. The project page shows real microscopy videos denoised using UDVD trained on real microscopy datasets. https://sreyas-mohan.github.io/udvd/

-

WarpDrive: Extremely Fast End-to-End Deep Multi-Agent Reinforcement Learning on a GPU

WarpDrive is a flexible, lightweight, and easy-to-use open-source reinforcement learning (RL) framework that implements end-to-end multi-agent RL on a single GPU (Graphics Processing Unit). Using the extreme parallelization capability of GPUs, WarpDrive enables orders-of-magnitude faster RL compared … https://github.com/salesforce/warp-drive